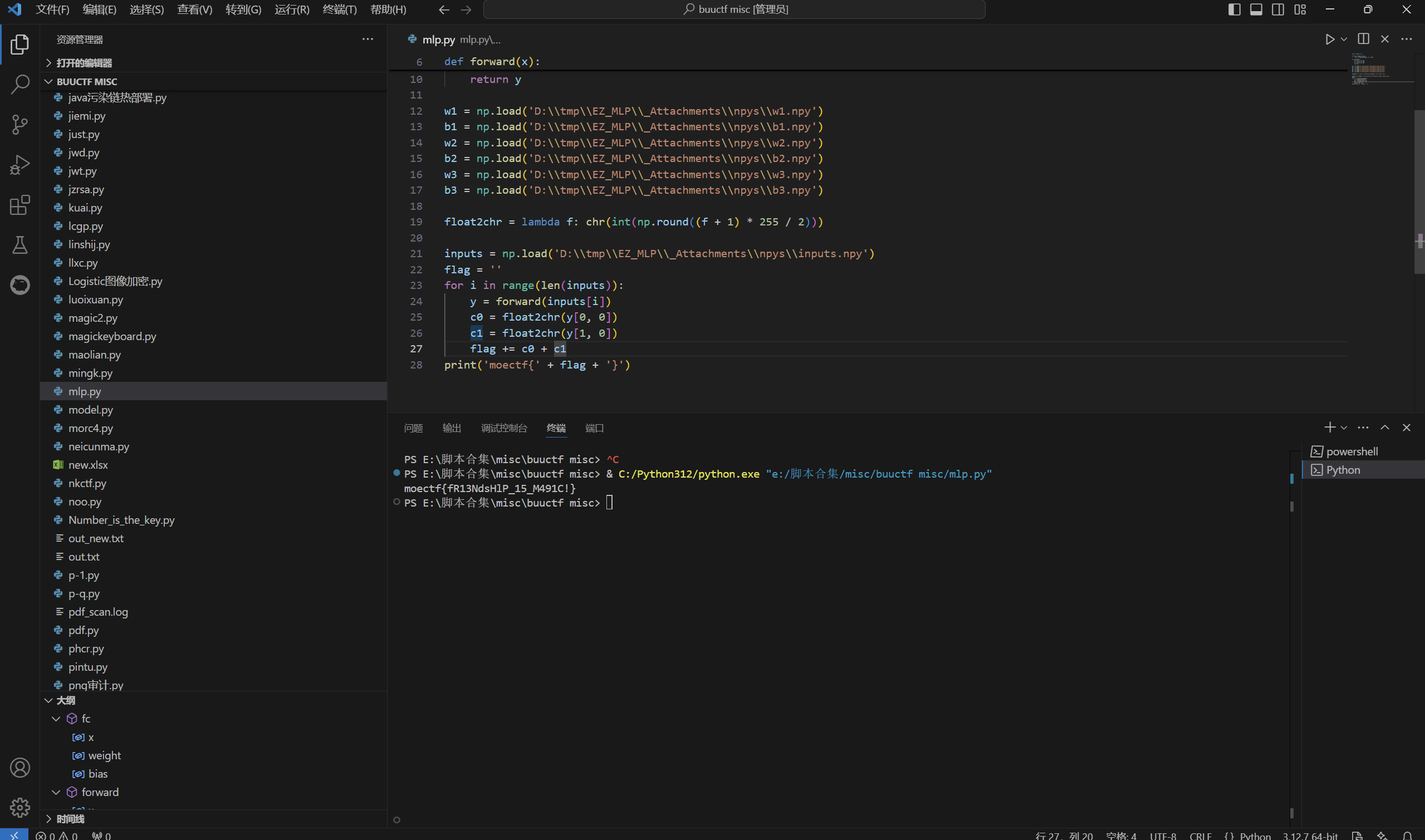

import numpy as np
import torch

from model import Net

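def inv_fc(y, weight, bias, epsilon=1e-8):
    """
    Invert a fully connected layer y = x @ weight.T + bias.

    Uses the Moore-Penrose pseudo-inverse so that non-square weight
    matrices can be handled. epsilon is passed as rtol for numerical stability.
    """
    # rtol is the relative cutoff below which singular values are treated as
    # zero, which keeps a near-singular weight matrix from blowing up the result
    weight_pinv = torch.linalg.pinv(weight, rtol=epsilon)
    return torch.matmul(y - bias, weight_pinv.T)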

def inv_sigmoid(y, epsilon=1e-8):
    """
    Inverse of the sigmoid activation (the logit function).

    epsilon guards against division by zero and numerical overflow.
    """
    # Clamp into (0, 1) to avoid NaN
    y_clamped = torch.clamp(y, epsilon, 1 - epsilon)
    return torch.log(y_clamped / (1 - y_clamped))

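def float2chr(f):
    """Convert a float in [-1, 1] to a character."""
    # Map [-1, 1] onto [0, 1], clipping out-of-range values
    normalized = np.clip((f + 1) / 2.0, 0, 1)
    # Round to the nearest integer in [0, 255]
    int_val = int(np.round(normalized * 255))
    # Clamp to the printable ASCII range (32 to 126)
    return chr(max(32, min(126, int_val)))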

def calculate_flag(checkpoint_path='_Checkpoint.pth'):
    """
    Compute the flag tensor by inverting the model layer by layer.

    Returns (flag_tensor, is_valid), or (None, False) on error.
    """
    try:
        # Load the model and the base input
        checkpoint = torch.load(checkpoint_path)
        net = Net()
        net.load_state_dict(checkpoint['model'])
        base_input = checkpoint['input']

        # Target output
        intended_out = torch.tensor([2., 3., 3.], dtype=torch.float32)

        # Named variable for readability
        half_scale = net.scale / 2.0

        # ===== Layer-by-layer inversion (no gradients needed) =====
        with torch.no_grad():
            # 1. Invert the third layer
            fc3_input = inv_fc(intended_out, net.fc3.weight, net.fc3.bias)

            # 2. Invert the second layer's activation and scaling
            # Undo the scaling first: x = (y + scale/2) / scale
            scaled_fc2_output = (fc3_input + half_scale) / net.scale
            # Then undo the sigmoid activation
            fc2_output = inv_sigmoid(scaled_fc2_output)

            # 3. Invert the second layer
            fc2_input = inv_fc(fc2_output, net.fc2.weight, net.fc2.bias)

            # 4. Invert the first layer's activation and scaling
            scaled_fc1_output = (fc2_input + half_scale) / net.scale
            fc1_output = inv_sigmoid(scaled_fc1_output)

            # 5. Invert the first layer
            fc1_input = inv_fc(fc1_output, net.fc1.weight, net.fc1.bias)

            # Compute the flag tensor
            flag_tensor = fc1_input - base_input

            # Verify the result with a forward pass
            actual_output = net(base_input + flag_tensor)
            is_valid = torch.allclose(
                actual_output,
                intended_out,
                atol=1e-5,
                rtol=1e-5
            )

        return flag_tensor, is_valid
    except Exception as e:
        print(f"Error while computing the flag: {e}")
        return None, False

def verify_and_show_flag(flag_tensor, checkpoint_path='_Checkpoint.pth'):
    """Verify the flag tensor and display the flag."""
    try:
        checkpoint = torch.load(checkpoint_path)
        net = Net()
        net.load_state_dict(checkpoint['model'])
        base_input = checkpoint['input']

        # Compute the output
        output = net(base_input + flag_tensor)

        # Compare with a tolerance rather than exact equality
        target = torch.tensor([2., 3., 3.])
        is_valid = torch.allclose(
            output.detach(),
            target,
            atol=1e-5,
            rtol=1e-5
        )

        if is_valid:
            # Convert to a string
            flag_str = ''.join(float2chr(f) for f in flag_tensor.detach().numpy().ravel())
            print('=' * 50)
            print('You made this little MLP happy, here\'s his reward:')
            print(f'moectf{{{flag_str}}}')
            print('=' * 50)
            return True
        else:
            print("Verification failed: output does not match")
            print(f"Expected output: {target.tolist()}")
            print(f"Actual output: {output.detach().tolist()}")
            return False
    except Exception as e:
        print(f"Error during verification: {e}")
        return False

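def main():
    """Main function: compute, verify, and display the flag."""
    # Compute the flag tensor
    flag_tensor, is_calculated = calculate_flag()
    if flag_tensor is None or not is_calculated:
        print("Could not compute a valid flag tensor")
        return

    # Verify and display the flag
    if not verify_and_show_flag(flag_tensor):
        print("Flag verification failed")
        # Do not save a tensor that failed verification
        return

    # Save the flag tensor (optional)
    try:
        torch.save(flag_tensor, '_FlagSolution.pth')
        print("Flag tensor saved to '_FlagSolution.pth'")
    except Exception as e:
        print(f"Failed to save the flag tensor: {e}")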

if __name__ == '__main__':
    main()
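The activation inversion used in steps 2 and 4 can be checked in isolation with a scalar round trip. The sketch below is only an illustration in plain Python, not part of the solver. It assumes the forward activation is `scale * sigmoid(x) - scale / 2`, which is inferred from the comment `x = (y + scale/2) / scale` because `model.py` is not shown. The helper names `activation` and `inv_activation` and the value `scale = 4.0` are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def logit(p, eps=1e-8):
    # Clamp into (0, 1) so the logarithm never sees zero or a negative value.
    p = min(max(p, eps), 1.0 - eps)
    return math.log(p / (1.0 - p))


def activation(x, scale):
    # ASSUMED forward step: a sigmoid rescaled to the range (-scale/2, scale/2).
    return scale * sigmoid(x) - scale / 2.0


def inv_activation(y, scale, eps=1e-8):
    # Undo the shift and the scaling, then apply the logit.
    return logit((y + scale / 2.0) / scale, eps)


scale = 4.0  # hypothetical; the solver reads the real value from net.scale

# A value inside the activation's range survives the round trip.
x = 0.75
y = activation(x, scale)
x_back = inv_activation(y, scale)
print(abs(x - x_back) < 1e-9)

# A target outside (-scale/2, scale/2) has no preimage. The clamp pins it
# to the boundary, so the forward pass can no longer reproduce it.
y_bad = 3.0
x_bad = inv_activation(y_bad, scale)
y_round_trip = activation(x_bad, scale)
print(y_round_trip)
```

Under that assumption, this is one way the solver's final `torch.allclose` check can fail: if inverting a layer yields a value outside the activation's range, the clamp in `inv_sigmoid` silently replaces it and the recovered input no longer maps to the target output.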